ChatGPT and the Pareto Principle

I still remember the conference call with Microsoft about two decades ago. I was head of engineering for the Advanced Technology Group at Sonic Solutions, at the time one of the few companies with a fully functioning software DVD player, and Microsoft was interested in integrating our code into the next version of Windows. The call didn’t go as well as we had anticipated, because one of Microsoft’s most senior engineers had built a first version of his own DVD player over the weekend and was wondering why his team would even need Sonic. “Give them a few more weeks and they’ll be back,” our CTO stated confidently after the call. Indeed, they were back a few weeks later, having realized that, as usual, the devil was in the details. No Windows customer would want their DVD player to crash in the middle of a movie, so it needed to handle essentially 100% of the DVD-Video discs ever released, even those with authoring bugs in them. Sonic’s offering was not only a great implementation of the DVD specification but also an extensive collection of workarounds for questionable DVD implementations that nevertheless made it to market. If I remember correctly, Disney’s Snow White was a notorious test case in that regard.

The Pareto Principle: The Remaining 20% of Functionality Takes Forever

The story above is a good illustration of the Pareto Principle (also known as the “80/20 rule”), which applies across nearly every industry. I like to apply a version of the rule to software engineering projects:

80% of the product functionality takes about 20% of the total development time. The remaining 20% of functionality takes the other 80% of the project’s development time.

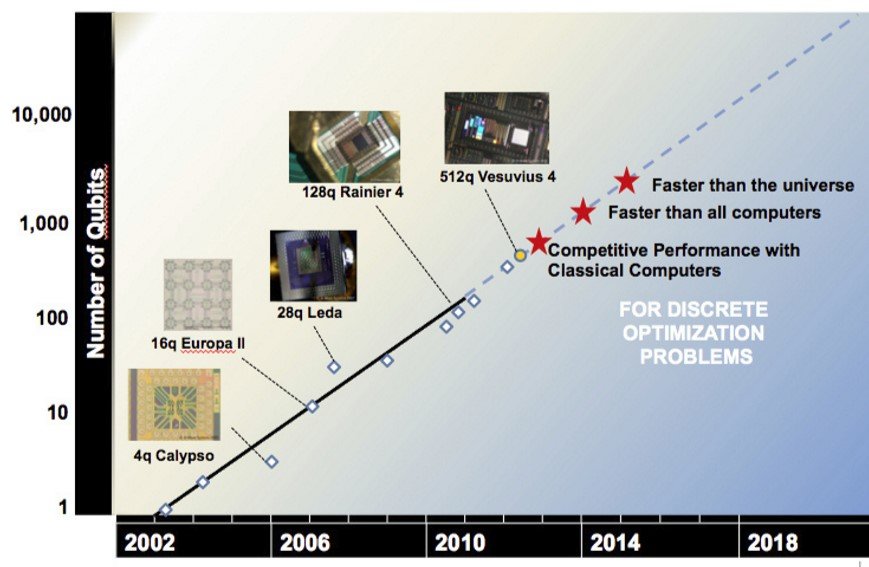

In other words, it’s quite easy to demonstrate significant initial progress in a software project, but turning that project into a high-quality product then takes several times as long as the initial phase (four times as long, by the rule’s own numbers). We can quibble about the exact percentages, but the general concept applies to most engineering fields, and especially to artificial intelligence. We are initially amazed when an “80%” product is released, but that amazement soon turns to frustration as the remaining 20% of functionality (or accuracy) dribbles out slowly or is never delivered!

For example, when Siri was first released on the iPhone 4S in 2011, it seemed like magic. Twelve years later, the technology has gotten only marginally better, and we’ve largely given up talking to our phones on a regular basis because correcting Siri’s mistakes is so frustrating. For another example, back in 2015, Google’s Chris Urmson famously declared that one of the goals of his work on self-driving cars was to ensure that his young son would never need to get a driver’s license. Well, his son is now 19 years old and is probably either quite sick of hailing Ubers or waiting in line at the DMV like the rest of us.

ChatGPT: 80% Accurate?

ChatGPT is the latest illustration of the 80/20 rule. When it was released late last year, we were mesmerized by its intelligence and its ability to carry on conversations. But now that the initial excitement has worn off, we are beginning to hear stories about the technology’s unresolved flaws. Those flaws are a key reason Google delayed releasing its own version until its competitor Microsoft forced its hand, and Google’s rushed launch then did not go so well.

Many knowledge workers are asking themselves, “Will ChatGPT replace me?” Even software engineers are asking it, since ChatGPT can generate working code! Well, if we apply the Pareto Principle, we might conclude that this is unlikely in the near or medium term. But a better question may be, “Will a human using ChatGPT replace me?” If you replace ChatGPT in that question with other (previously groundbreaking) technologies, such as the telephone, the calculator, the computer, or the internet, I think you will have your answer quite quickly.

What Can We Learn from SVB?

No update from Silicon Valley would be complete this week without a comment on the Silicon Valley Bank fiasco. There is no shortage of pundits casting blame, but what stands out most for me is the sheer speed of SVB’s demise, fed by panic spreading through social media. But what is the root cause of that panic? As WSJ columnist Daniel Henninger recently observed, “the problem is the rise and spread of our own unmediated human credulousness on an unprecedented scale.” This is something we all need to fix, especially as a new generation of generative AI tools comes online with 80% accuracy ;-).
