Artificial Intelligence: Back to the Future?

We are at the peak of the third hype cycle since Artificial Intelligence research was founded in the mid-1950s by Marvin Minsky, Herbert Simon, Allen Newell, and others. Will this cycle crash like the two before it, bringing another "AI Winter"? Or have we entered a permanent new era of computing?

“Machines will be capable, within twenty years, of doing any work a man can do.” Herbert Simon, 1965

“My 11-year-old son should never need a driver’s license.” Chris Urmson, 2015

My first job out of university was at IBM's AI Technology Center in the late 1980s. AI had emerged from its first winter, and building rule-based (expert) systems was all the rage. I built such systems for Union Pacific, to route its railroad cars, and for Swissair, to schedule gates at Zurich Airport. As a Knowledge Engineer, I interviewed human experts and translated what they told me into a data model and a set of rules for the system to execute. Expert systems never became a commercial success: human decision making is not driven entirely by rules that can be written down, and instinct or "gut feeling" plays a large part.

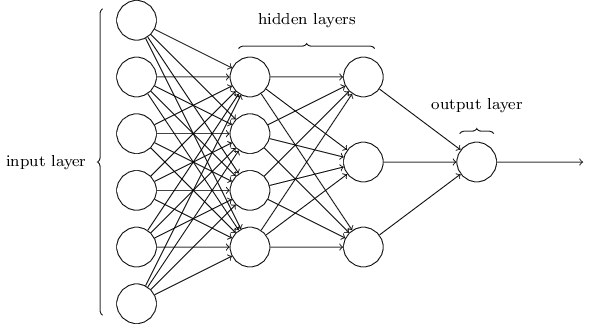

So a second AI winter set in while researchers went back to the drawing board. In fact, the form of AI most prevalent today already existed back then, but computers were not powerful enough to run it. Neural networks had been invented to more closely mimic the "gut feeling" component of human decision making: learning to recognize patterns in the web of data we are exposed to and, based on prior experience, making the decision that feels optimal. Neural networks require a great deal of processing to be performed in parallel, which is a challenge for conventional computers, whose central processing unit (CPU) is designed to perform calculations one at a time, serially.
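To make the parallelism point concrete, here is a minimal Python sketch of a single neural-network layer. It assumes a simple sigmoid neuron, and the function names and toy weights are hypothetical, chosen only for illustration. Each neuron's output depends only on the layer's inputs, never on the other neurons in the same layer, so the neurons can be evaluated in any order or all at once.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def layer_serial(inputs, weight_rows, biases):
    """CPU-style evaluation: neurons are computed one after another."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def layer_concurrent(inputs, weight_rows, biases):
    """Same layer, with each neuron submitted as an independent task.

    This only demonstrates that the tasks need no coordination with each other.
    CPython threads will not actually speed up this arithmetic; massively
    parallel hardware such as a GPU is what exploits the independence.
    """
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in input order, so the output matches layer_serial.
        return list(pool.map(lambda wb: neuron(inputs, wb[0], wb[1]), zip(weight_rows, biases)))


if __name__ == "__main__":
    # Hypothetical toy values: 3 inputs feeding a layer of 4 neurons.
    inputs = [0.5, -1.0, 2.0]
    weight_rows = [[0.1, 0.2, 0.3], [-0.4, 0.5, 0.6], [0.7, -0.8, 0.9], [1.0, 1.0, -1.0]]
    biases = [0.0, 0.1, -0.2, 0.3]
    serial = layer_serial(inputs, weight_rows, biases)
    concurrent = layer_concurrent(inputs, weight_rows, biases)
    print(serial == concurrent)  # prints True: evaluation order does not change the result
```

A real network stacks many such layers, each with thousands of neurons, which is why the sheer number of independent multiply-and-add operations overwhelmed the serial CPUs of the era.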

Enter the GPU

Hardware finally caught up in the 21st century, driven by the computer gaming industry's demand for high-performance graphics. Figuring out how each pixel of a 1920 x 1080 screen should be lit, for a frame that lasts only a few hundredths of a second, requires massively parallel computation. New chip architectures were invented to do this, and high-performance gaming computers arrived with powerful Graphics Processing Units (GPUs) alongside the traditional CPUs that handled the remaining programming tasks.
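A quick back-of-the-envelope calculation shows the scale of the problem. The sketch below assumes a 60 frames-per-second refresh rate (a common target for games, not a figure from the text above) and counts only one lighting calculation per pixel per frame; real renderers do considerably more work per pixel.

```python
# Back-of-the-envelope workload for lighting every pixel of a 1920 x 1080 display.
WIDTH, HEIGHT = 1920, 1080
FRAMES_PER_SECOND = 60  # assumption: a common refresh-rate target for games

pixels_per_frame = WIDTH * HEIGHT                         # 2,073,600 pixels
frame_budget_ms = 1000 / FRAMES_PER_SECOND                # about 16.7 ms per frame
pixels_per_second = pixels_per_frame * FRAMES_PER_SECOND  # 124,416,000

print(f"{pixels_per_frame:,} pixels per frame")
print(f"{frame_budget_ms:.1f} ms to light each frame")
print(f"{pixels_per_second:,} pixel calculations per second (at one per pixel)")
```

Because each pixel in a frame can be shaded independently of its neighbors, the work splits naturally across many small cores, the same property that makes neural network layers a good fit for GPUs.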

It turns out that these GPUs are also perfect for processing neural networks. It should be no surprise that NVIDIA, a company founded in 1993 to provide high-performance graphics chips for the computer gaming market, now calls itself "the AI computing company."

Big Data

Neural networks require exposure to a diverse set of real-life scenarios, so they have an insatiable demand for training data. It is all about pattern recognition: if a pattern hasn't been seen before, the network can fail drastically, even ridiculously. Fortunately, a second computing trend emerged in the 21st century: new storage hardware and architectures that allow businesses to store massive amounts of raw data. Where a retailer like Macy's once might have stored a simple list of current-year customer transactions in a SQL database, eBay uses a 40 PB Hadoop cluster to track far more variables, including behavioral data, so that it can provide search, recommendation, and merchandising services to its global customer base.

Internet of Things / Cloud Computing

The third computing trend, still in progress, is the build-out of a global wireless network that provides connectivity at such low cost that we can assume ubiquitous connectivity in the near future, even for simple devices like an occupancy sensor. This trend reinforces Big Data: connected devices generate even more raw data for training neural networks.

But IoT also works in reverse, bringing powerful artificial intelligence to very simple network-connected devices. Amazon's Echo is a great example. Without network access, Alexa is a dumb Bluetooth speaker. With network access, she can answer a range of questions, control your household electronics, even order pizza! Echo devices sell for as little as $49, yet they can recognize conversational speech and provide sassy answers to many of your questions. How is that possible? These devices are simple (and cheap) data conduits: all the real processing is performed in the cloud, at a remote Amazon data center. Cloud computing has become so cheap that Amazon is happy to do the processing for free, in return for the intelligence (data) it gains, which lets it present you with an enticing product offer the next time you log into its website.

The Golden Age

Together, these three trends (GPU, Big Data, IoT) make it improbable that we will enter another AI winter period. AI is here to stay, but more innovation is required. Neural networks are great at pattern matching to solve problems such as vision processing, fraud detection, language translation, speech recognition, etc. But they are useless without training data and cannot handle new, never-before-seen situations. Machines can imitate, while humans improvise and innovate.

Last year, IBM's Watson was credited with saving a woman's life by diagnosing a rare form of leukemia. In just ten minutes, Watson cross-referenced the patient's information against 20 million cancer research papers and made the correct diagnosis. Human doctors, by contrast, had been stumped by her symptoms for months, no doubt trying to match them to more common ailments from their more limited experience.

In Florida, a Tesla running on Autopilot hurtled toward an intersection where a large tractor-trailer was crossing its path at a right angle. The lighting conditions were difficult, and this particular scenario had not been encountered before. The neural network took the closest match from its training data, concluding that it was approaching an overhead billboard. It had been taught to ignore such billboards, so the car continued at full speed, resulting in a disastrous collision. Would a human driver have made the same mistake, or would they have slowed down and focused more effort on recognizing the object in question?

AI is here to stay. Over the next ten years we will continue to see surprising and interesting progress, but at times AI will also be disappointing, even shocking.

- Feb 9, 2017 Artificial Intelligence: Back to the Future?

-

2015

- Sep 30, 2015 Silicon Valley Is Not the Solution to Your Problem

- Aug 11, 2015 The Multi-Dimensional Startup

- Feb 6, 2015 Wearables: What's next?

-

2014

- Oct 15, 2014 Startup Burn Rate: Simmer or Meteor?

- Aug 27, 2014 5 Surprises About Internet of Things

- Jun 19, 2014 How to Talk to Corporates

- Apr 30, 2014 How to Talk to Startups

- Mar 11, 2014 Avoid the Corporate Disease

- Feb 11, 2014 The Corporate Disease

- Jan 28, 2014 Founders vs. Owners

- Jan 10, 2014 CES at the Fringe

- Jan 3, 2014 Silicon Valley Lite

-

2013

- Dec 17, 2013 How Corporates are Plugging In

- Dec 10, 2013 Can't We All Get Along?